How tactile exhibits become living stores of knowledge

Digitisation helped museums weather the stormy times of lockdown.

Visitors welcomed the chance to see exhibits online while the doors were closed, and the virtual view into the depots partly made up for the missing experience of a live visit. But even as museums reopen and people can once again enjoy looking at art, discovering history and helping to shape their museum visit, the offer for people with disabilities still has plenty of room to grow.

As places of democratic knowledge transfer and social discourse, museums can make an important contribution to greater empathy and visibility by tapping the enormous potential of “Design-For-All” and breaking new ground in communication.

Hands-on exhibits play a crucial role here

People with visual impairments in particular lack tactile offerings, even though high-performance 3D scanners make it possible to create faithful replicas of the originals at low cost and in high quality. Even reflective or sensitive surfaces can easily be scanned and reproduced in CORIAN® or PU block material.

Supplementary audio feedback turns a tactile exhibit into a multi-sensory one, and people learn more easily when two senses are engaged at once. Touching a sensor point on the exhibit triggers the audio, which plays precisely the content linked to that point and so lets visitors explore the exhibit independently.
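The principle of one sensor point triggering one piece of content can be sketched in a few lines. This is a minimal Python illustration, assuming each sensor point reports a simple identifier when touched; the point names, file paths and the `on_touch` function are hypothetical and not taken from a real exhibit.

```python
from typing import Dict, Optional

# Hypothetical mapping: each sensor point on the exhibit is linked to exactly one audio clip.
# Point IDs and file names are illustrative assumptions, not from a real installation.
AUDIO_BY_POINT: Dict[str, str] = {
    "head": "audio/head.mp3",
    "left_hand": "audio/left_hand.mp3",
    "base": "audio/inscription.mp3",
}


def on_touch(point_id: str) -> Optional[str]:
    """Return the audio clip to play when a sensor point is touched.

    Unassigned points return None, so touching them stays silent.
    """
    return AUDIO_BY_POINT.get(point_id)


if __name__ == "__main__":
    for touched in ["head", "base", "shoulder"]:
        clip = on_touch(touched)
        print(touched, "->", clip if clip else "(no audio)")
```

The simplicity of this lookup is also its limit: every new piece of content needs its own physical sensor point.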

Switching a device on or off with one touch is something we encounter everywhere in everyday life: coffee machines, light switches and telephones make our lives easier with sensor technology.

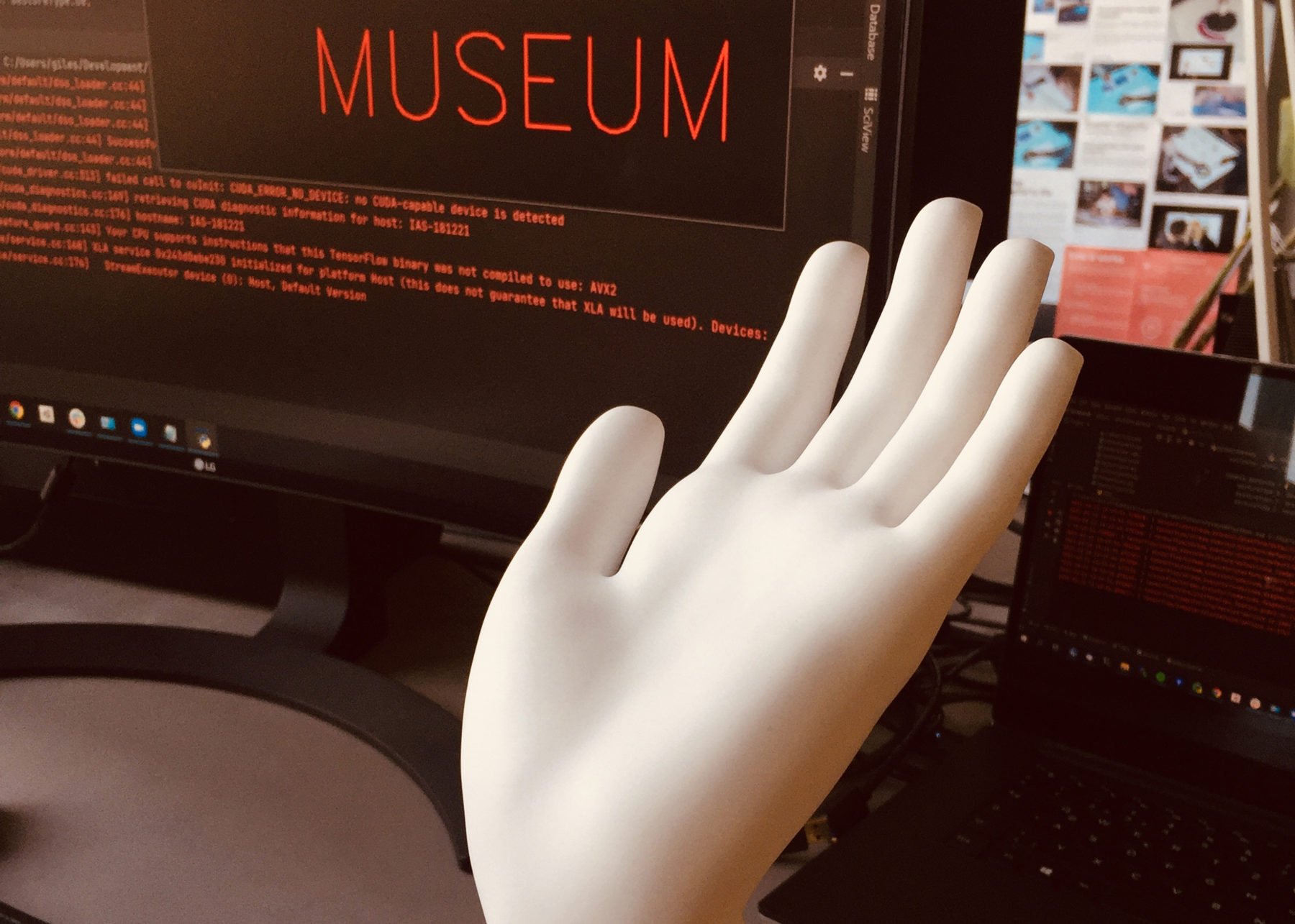

The smartphone generation taps and swipes across digital surfaces and has long since internalised the associated human-machine gestures. But how can gestures control the content of a tactile museum exhibit?

Until now, the technical effort involved and the size of the exhibit have limited what gesture recognition could do: each sensor point played exactly one piece of information. A dead end?

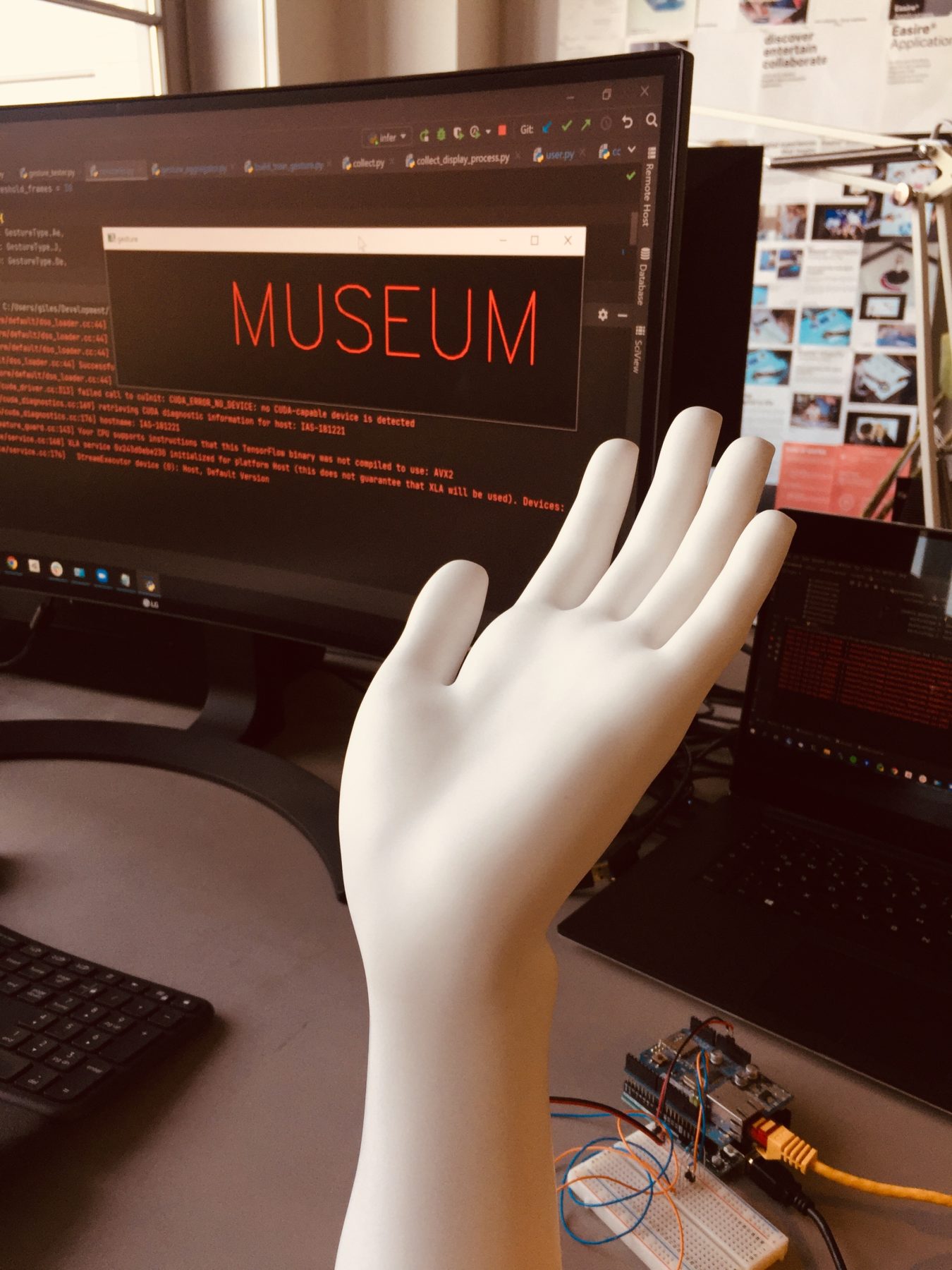

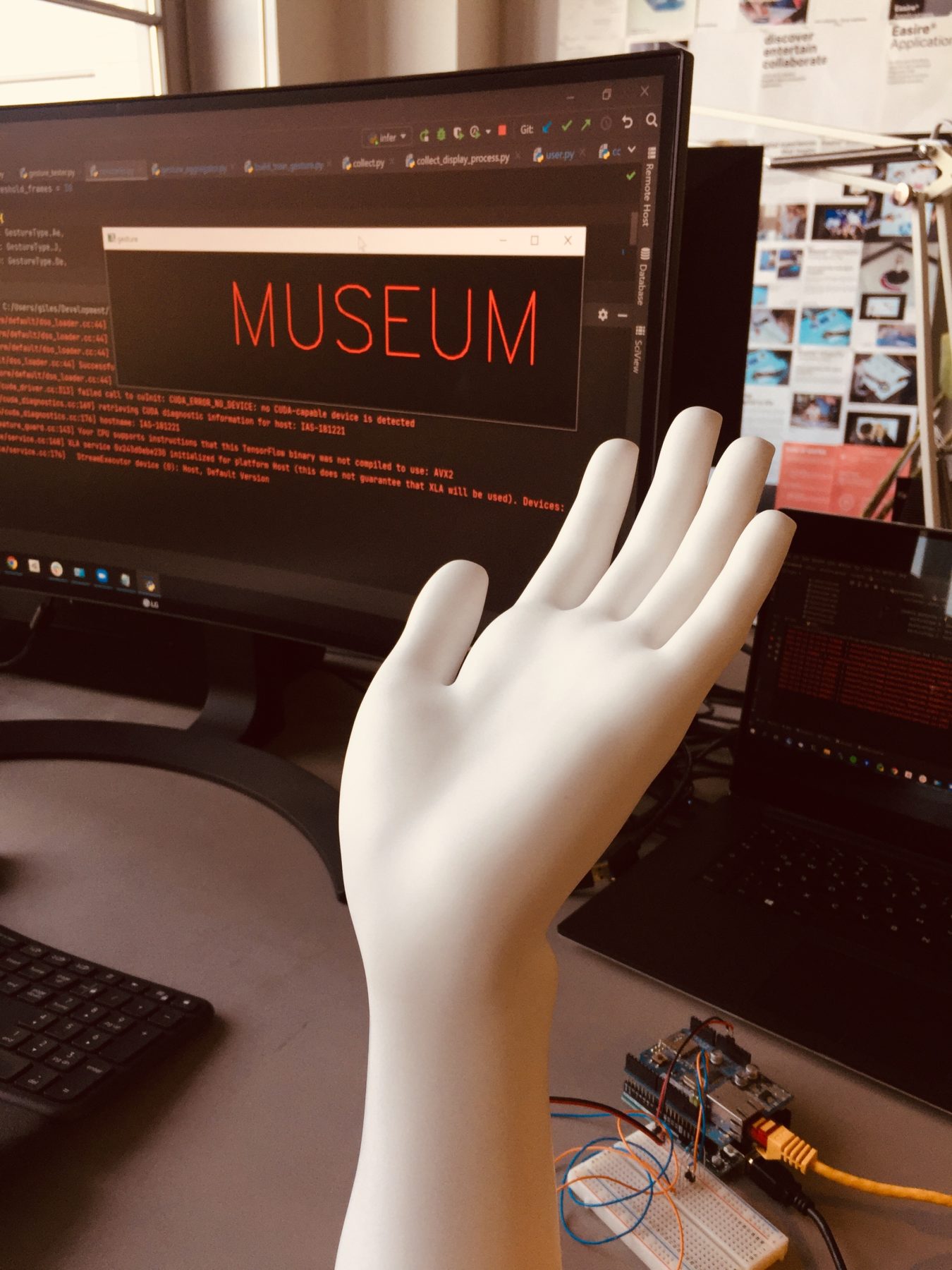

A simple AI offers a way out: instead of reading data from individual touch points, it draws on internal sensor fields, which also make movement measurable.

Integrated into almost any object, the sensors can recognise a range of gestures, provided they have been taught them.

Teaching a simple gesture takes around 100 repetitions, which are linked in TEACH mode to a position on the object and to the desired information. Just as a smartphone recognises your fingerprint after you have placed your finger on the sensor several times at different angles, the object then “knows” where and how it is being touched.
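The article does not describe the AI itself. As a rough illustration of how around 100 repetitions can be turned into a gesture library, the sketch below uses a nearest-centroid classifier, a deliberately simple stand-in technique and not werk5's actual method. It assumes each touch has already been converted into a fixed-length vector of sensor-field values; `GestureLibrary`, `teach`, `recognise`, the feature vectors and the distance threshold are all hypothetical.

```python
import math
import random
from typing import Dict, List, Optional, Sequence

Vector = Sequence[float]


class GestureLibrary:
    """Hypothetical nearest-centroid gesture recogniser (a stand-in, not the real system).

    Each gesture is taught from repeated samples and linked to the content it triggers.
    """

    def __init__(self, max_distance: float) -> None:
        self.max_distance = max_distance  # reject touches that match no gesture closely enough
        self.centroids: Dict[str, List[float]] = {}
        self.content: Dict[str, str] = {}

    def teach(self, name: str, samples: List[Vector], content: str) -> None:
        """TEACH mode: average the repetitions into one reference vector per gesture."""
        dims = len(samples[0])
        self.centroids[name] = [sum(s[i] for s in samples) / len(samples) for i in range(dims)]
        self.content[name] = content

    def recognise(self, reading: Vector) -> Optional[str]:
        """Return the content of the closest taught gesture, or None if none is close enough."""
        best_name, best_dist = None, math.inf
        for name, centroid in self.centroids.items():
            dist = math.dist(reading, centroid)  # Euclidean distance
            if dist < best_dist:
                best_name, best_dist = name, dist
        if best_name is None or best_dist > self.max_distance:
            return None
        return self.content[best_name]


def noisy_repetitions(base: Vector, n: int, noise: float, rng: random.Random) -> List[List[float]]:
    """Simulate n slightly varying repetitions of the same gesture (illustrative data only)."""
    return [[x + rng.gauss(0.0, noise) for x in base] for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    library = GestureLibrary(max_distance=0.5)
    # Illustrative 4-value "sensor field" patterns; real feature extraction is not described.
    library.teach("tap_top", noisy_repetitions([1.0, 0.0, 0.0, 0.0], 100, 0.05, rng), "Info about the top")
    library.teach("swipe_down", noisy_repetitions([0.2, 0.6, 0.6, 0.2], 100, 0.05, rng), "Info about the side")
    print(library.recognise([0.95, 0.05, 0.0, 0.02]))
    print(library.recognise([0.0, 0.0, 0.0, 1.0]))
```

Averaging many repetitions smooths out the natural variation between touches, which is one plausible reason why a gesture needs to be repeated so often; the threshold keeps unfamiliar touches from triggering the wrong content.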

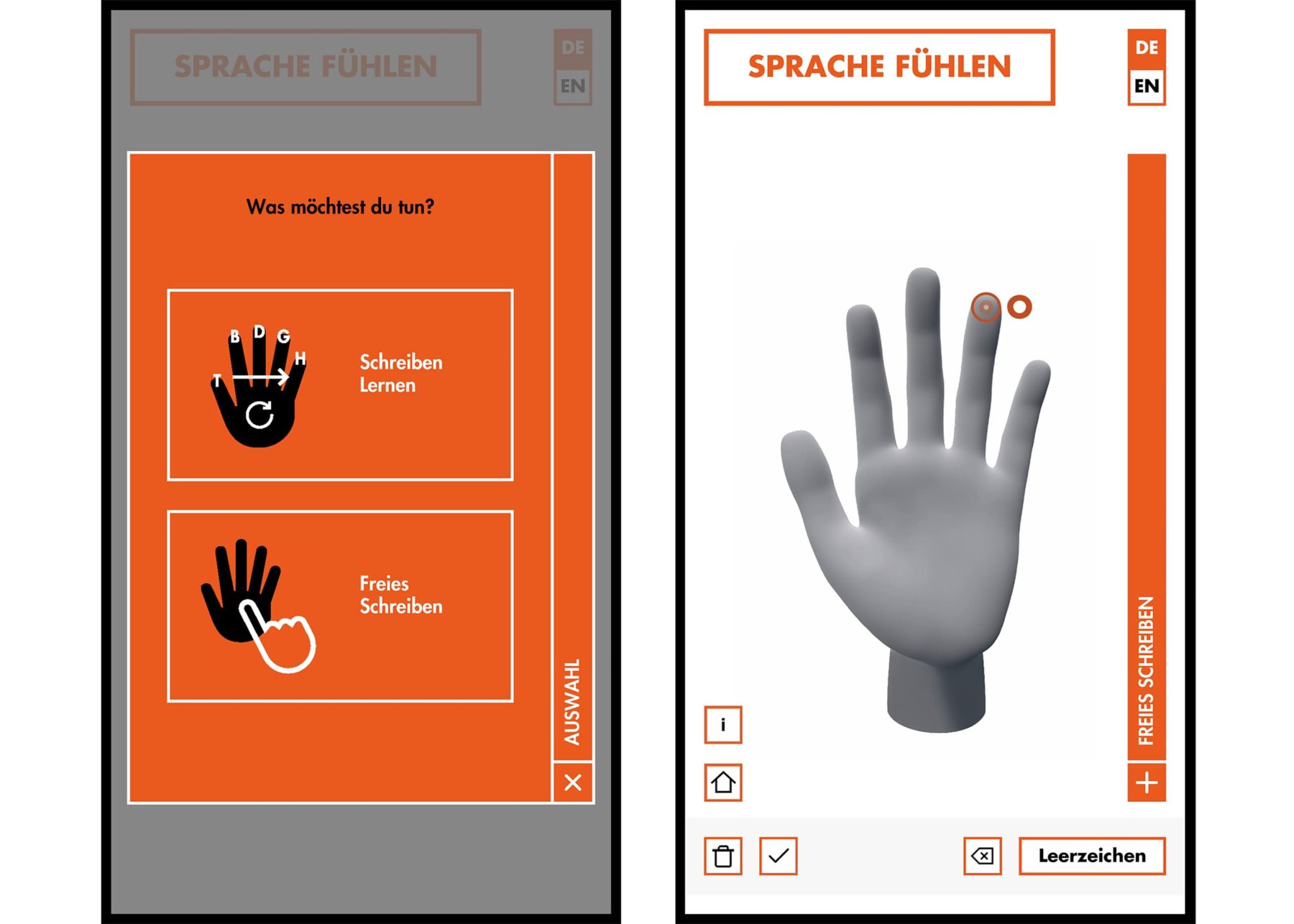

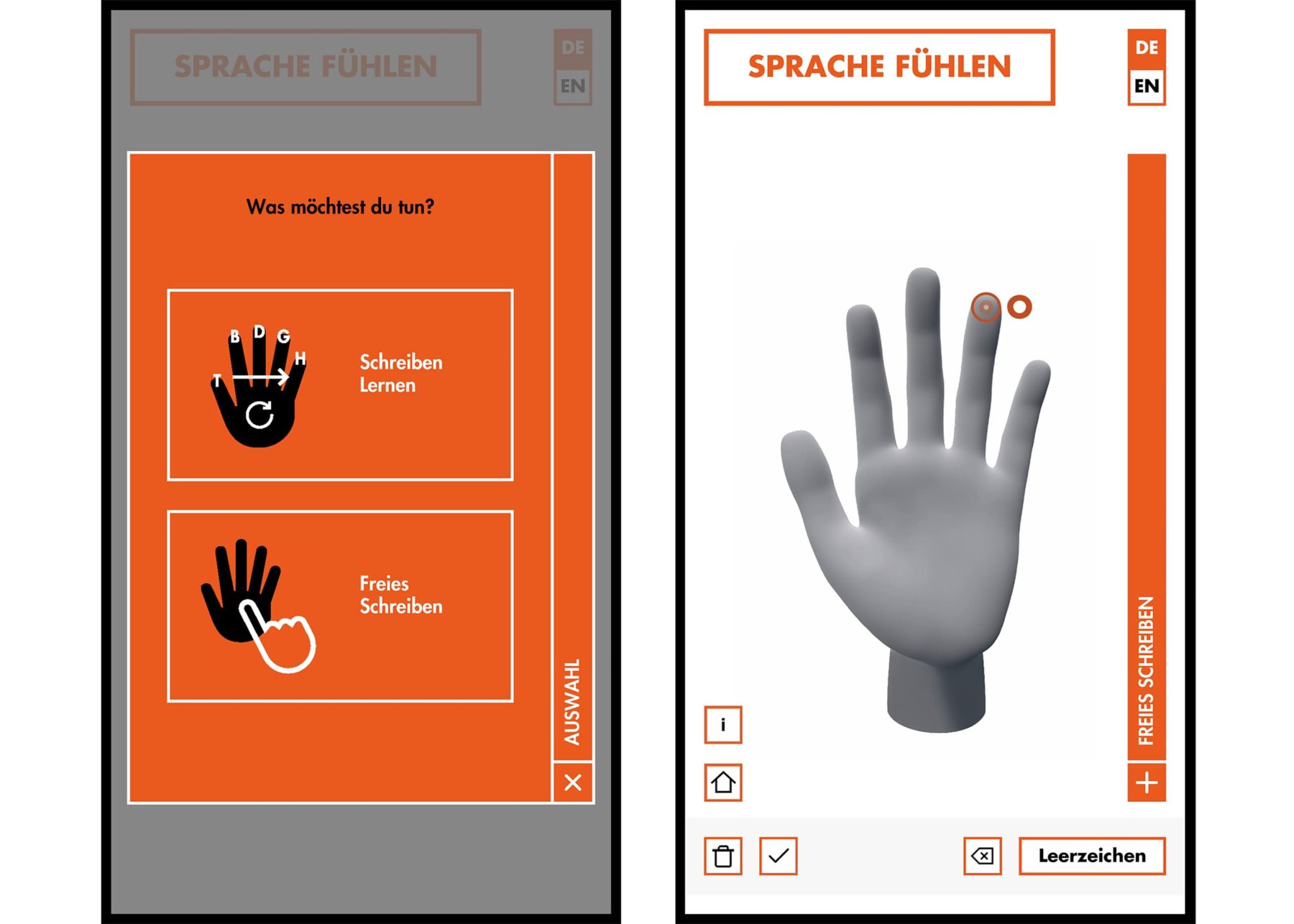

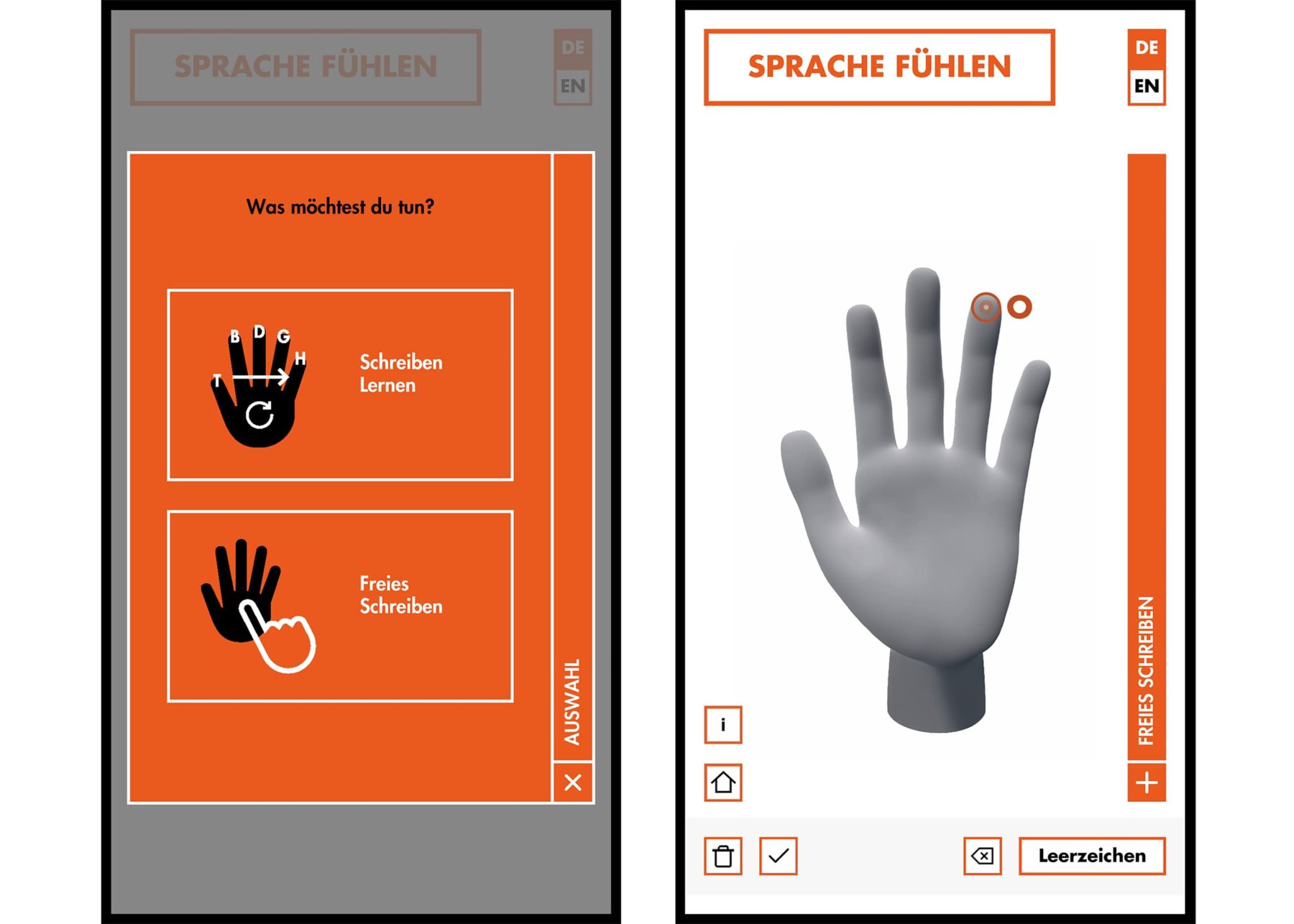

Visitors to the Deutsches Museum Nürnberg learn the Lorm alphabet, a tactile hand alphabet used by deafblind people, in two modes and see what they write on a screen.

In ‘Learn to write’ mode, visitors copy a given word using gestures; in ‘Free writing’ mode, they can experiment freely.

To make this possible, the sensor system had to ‘learn’ every letter. In TEACH mode, werk5 staff repeated the gestures of the Lorm alphabet until the gesture library was complete. Museum staff can also use TEACH mode via the content management system (CMS) to train the exhibit further and improve the responsiveness and precision of its gesture recognition.

Which mode is more popular? Do people want to be guided, or do they prefer to write on their own? Are some areas of the exhibit particularly interesting while others are neglected?

The usage data needed to answer such questions can be collected anonymously and read out via the CMS. Museum staff can then update the linked information themselves through the CMS. What is more, the gesture library can be expanded without limit, since the sensor system can learn new gestures in TEACH mode. The exhibit thus remains adaptable and can also play a role in other exhibitions and contexts.
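A minimal sketch of how anonymous usage data could answer questions like “which mode is more popular?”, assuming each interaction is logged only as a mode and a touched area, with no visitor identifier. `UsageEvent`, `summarise` and the area names are hypothetical; the article does not describe how the CMS actually stores or evaluates the data.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class UsageEvent:
    """One anonymous interaction (hypothetical log format): no visitor ID, only what was used."""
    mode: str  # e.g. "Learn to write" or "Free writing"
    area: str  # hypothetical name of the touched area or letter


def summarise(events: Iterable[UsageEvent], all_areas: Iterable[str]) -> Tuple[Counter, List[str]]:
    """Count uses per mode and list the areas that were never touched."""
    events = list(events)
    mode_counts = Counter(e.mode for e in events)
    touched = {e.area for e in events}
    neglected = sorted(set(all_areas) - touched)
    return mode_counts, neglected


if __name__ == "__main__":
    log = [
        UsageEvent("Free writing", "A"),
        UsageEvent("Free writing", "B"),
        UsageEvent("Learn to write", "A"),
    ]
    modes, neglected = summarise(log, ["A", "B", "C"])
    print(modes.most_common(1))  # [('Free writing', 2)]
    print(neglected)  # ['C']
```

Because the log holds only aggregated facts about the interaction, the evaluation stays anonymous by design: nothing in it can be traced back to an individual visitor.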